Paper to Paperless:

40-Year Evolution of Knowledge Management in Midstream Integrity Engineering

Paper Files

The story begins in 1982 in a field office in Linden, New Jersey, and follows a 38-year journey through slow, continuous improvement as well as dramatic step changes in knowledge management. In this field office, all regulated documents were paper records, filed neatly in every office and in the attic. Even so, the space was so crowded that the four engineers and technicians in the office often bumped chairs throughout the day. This environment was not conducive to the long periods of concentration needed to prepare a DOT report or a project proposal for the Houston office.

Introduction to mainframe computing

I was transferred from that field office to the company’s main office in Houston, Texas, and was introduced to the cutting-edge technology of the day: the mainframe computer. Working with this marvel of technology was far more cumbersome than expected; it often seemed it would be faster to type reports ourselves than to wait for the machine to run its course. We also had to learn a whole new information technology vocabulary to communicate and collaborate effectively with the team members who worked with this behemoth.

The plan for better managing knowledge and records about the physical properties and operation of the pipeline infrastructure was to gather field data with data loggers, upload and download that data across a 10,000-mile pipeline system, and then analyze it to demonstrate regulatory compliance. Although the technology was not yet ready to deliver such an efficient communication program, the dream of having central computers talk to field devices was born. In fact, it was forecast to be another decade before technology could interface seamlessly with this data set and streamline operations.

Dawn of personal computers

With the advent of PCs, much more computing power could be packed into much smaller spaces, which made a step change in knowledge management and worker efficiency possible. This inspired us to build on what we had learned from our earlier work with the mainframe computer. We started by taking dBASE, Lotus, and WordStar classes, learning the strange new language of computer programming, and quickly began putting together a Corrosion Control Program Plan using dBASE 2.1, which was later compiled with Clipper.

Database management systems and feeding the machine

To move the project along faster, we created a corrosion database management system and built it for the company PCs. With computing power now available in a compact footprint and a digital data organization system configured, we were ready to feed the machine data directly instead of manually typing the same values over and over. However, once again, a key link in the data supply chain lagged behind technologically.

We began testing the data loggers the industry had to offer, and after months of testing, none of them met our requirements. So, rather than wait for slow, continuous improvement, we pushed for a step change in capability by persuading a leading cathodic protection manufacturer to design and develop a data logger based on our field requirements and our computerized data management system design. The instrument took about a year to develop and was called the Tricorder, which I thought was an impressive name at the time. In fact, some units built in the mid-1980s are still working today. The tool was truly ruggedized and had smarts and capabilities that other products of the day simply did not have. The Tricorder was set up to work with an internal Lotus spreadsheet, so data could easily be uploaded to or downloaded from a computer. This greatly improved efficiency and reduced typos, errors, and omissions.
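The article does not document the Tricorder’s file format, so the sketch below only illustrates why electronic transfer cuts down on typos and omissions. It assumes a hypothetical CSV export with `station_id` and `potential_mv` columns; `Reading` and `import_readings` are illustrative names, not part of any real product. Instead of retyping values, the program parses each row and reports blank or non-numeric entries by line number so they can be rechecked.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Reading:
    """One test-station reading (hypothetical record layout)."""
    station_id: str
    potential_mv: float  # pipe-to-soil potential in millivolts


def import_readings(csv_text):
    """Parse a hypothetical logger CSV export.

    Returns (readings, problems), where problems is a list of
    (line_number, description) tuples for rows that need rechecking.
    """
    readings, problems = [], []
    reader = csv.DictReader(io.StringIO(csv_text))
    # Line 1 is the header, so the first data row is line 2
    # (assumes no quoted fields that span multiple lines).
    for line_no, row in enumerate(reader, start=2):
        station = (row.get("station_id") or "").strip()
        raw = (row.get("potential_mv") or "").strip()
        if not station or not raw:
            problems.append((line_no, "missing value"))  # an omission
            continue
        try:
            value = float(raw)
        except ValueError:
            problems.append((line_no, f"not a number: {raw!r}"))  # a typo
            continue
        readings.append(Reading(station, value))
    return readings, problems
```

For example, an export with a blank reading at TS-102 and a mistyped `-8S0` at TS-103 yields one clean reading and two flagged lines (3 and 4), rather than silently carrying the errors into a report.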

Initial lessons in scalability and supportability

Now that we had a proven, verified method to collect data automatically and to process, store, and retrieve it with unparalleled efficiency, the next step was to move from proof of concept to a full rollout on the new desktop computers in the Division Offices around the U.S. and in the Houston corporate office. We quickly learned that building a system that others can understand and use efficiently is much more challenging than building one that one or two people find intuitive. In addition, the flexibility needed to handle exceptions in different operating environments added to the burden of scaling and supporting the solution.

We knew we were on the right track. After about six months of work on the corrosion data management program, we thought we were ready for prime time, but we had gotten so lost in our own menus that we could not document our way out. Our boss tested the program and could not run it. He decided that it would not go out to the field until it worked with one keystroke. After a few more programming classes, we learned that the people-process-technology trifecta had another evolution to go through before our dream could be realized.

Portability of computers helps justify additional investment

We found that a worthwhile business case could be made for a major rewrite of the program, which promised to streamline work in the individual field offices located about every 50 miles along the pipeline corridor. The timing was right: the Compaq Portable 1, which weighed approximately 28 lb and used dual 5¼-inch floppy disk drives, had just arrived on the market. One floppy disk was used to start the computer, and the other ran a second software program.

Applying what we had learned about software design and menu architecture, we rewrote the program a second time. It took close to a year before we let our administrative assistant test it and then handed it to the boss for review, and it passed both tests. The only thing left was to develop a communication program to transfer data from the data logger to the computer and back.

Data latency and communication speed

A third-party consultant bid a few hundred dollars to write the data transfer protocols, and we were soon cruising along at a blistering 4800 baud, compared with the 300 baud typical of communication over telephone lines at the time. For perspective, 300 baud is roughly 300 bits per second, whereas today’s 100-200 Mbps connections are several hundred thousand times faster, so transmitting a one- or two-page document took minutes at best, compared with a fraction of a second today. The paperless dream was getting closer to reality. We soon found that larger data uploads had to be transferred to the PC, and the link had to be upgraded to 9600 baud to be effective.
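The arithmetic behind these speeds is simple: transfer time is the number of bits divided by the bit rate. The Python sketch below assumes one bit per symbol (so baud equals bits per second), 8N1 serial framing (10 bits per character), and a hypothetical 3,000 characters of plain text per page; the same framing is applied to the 100 Mbps case only to keep the comparison like-for-like.

```python
def transfer_seconds(num_chars, bits_per_second, bits_per_char=10):
    """Time to send num_chars characters over a link.

    bits_per_char=10 assumes 8N1 framing: 1 start bit, 8 data bits, 1 stop bit.
    Assumes one bit per symbol, so the baud rate equals the bit rate.
    """
    return num_chars * bits_per_char / bits_per_second


PAGE_CHARS = 3000  # hypothetical: roughly one page of plain text

LINKS = [
    ("300 baud", 300),
    ("4800 baud", 4800),
    ("9600 baud", 9600),
    ("100 Mbps", 100_000_000),  # modern link, same framing for comparison
]

for label, rate in LINKS:
    for pages in (1, 2):
        secs = transfer_seconds(pages * PAGE_CHARS, rate)
        print(f"{label:>9}: {pages} page(s) -> {secs:.4f} s")
```

Under these assumptions, one page takes 100 seconds at 300 baud and two pages take 200 seconds, versus 6.25 and 12.5 seconds at 4800 baud and well under a millisecond at 100 Mbps.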

Change management

Change management is a key stumbling block that derails many efforts to achieve a step change in performance. Technology had changed again, giving us a portable computer and program that could reach field offices, division offices, and corporate offices across the country, and we had the task of training the field personnel: approximately 45 technicians and engineers.

First, we had to teach them about the Compaq Portable 1 computer, DOS, Lotus, WordStar, and the Corrosion Database program, along with the data loggers. After weeks of training, we completed the first major test without a hiccup: gathering field data with the data loggers at each test station along the pipeline, uploading and downloading it to and from the computer, and analyzing it.

This was our first success. The reports could flag data that was out of compliance and check for unit conversions that were not working properly, and other features helped us submit the data on time, all with a single keystroke to get in and out of the menus.
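The article does not spell out how the reports decided what was out of compliance. As a minimal sketch, the Python below assumes the widely used -850 mV pipe-to-soil criterion measured against a copper/copper sulfate (CSE) reference electrode, ignoring other accepted criteria and IR-drop considerations. It also assumes a hypothetical heuristic for catching unit-conversion errors: because these potentials are normally hundreds of millivolts, a value with a magnitude below 10 is treated as if it had been recorded in volts. `check_reading` and `exception_report` are illustrative names.

```python
# -850 mV vs. Cu/CuSO4 (CSE); other accepted criteria are ignored in this sketch.
CRITERION_MV = -850.0


def check_reading(potential_mv):
    """Classify one pipe-to-soil reading given in millivolts (CSE).

    Returns "suspect units", "out of compliance", or "ok".
    """
    # Hypothetical heuristic: potentials are usually hundreds of mV,
    # so a magnitude below 10 suggests the value was recorded in volts.
    if abs(potential_mv) < 10:
        return "suspect units"
    # A reading less negative than -850 mV fails this criterion.
    if potential_mv > CRITERION_MV:
        return "out of compliance"
    return "ok"


def exception_report(readings):
    """Return (station, reading, status) for every reading that is not ok."""
    report = []
    for station, mv in readings:
        status = check_reading(mv)
        if status != "ok":
            report.append((station, mv, status))
    return report
```

Given readings of -912 mV at TS-101, -780 mV at TS-102, and -0.87 at TS-103, the exception report lists TS-102 as out of compliance and TS-103 as having suspect units, while TS-101 passes.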

However, it took the field personnel over a year to gain confidence in the data loggers and understand the computer programs before they gave up their paper forms and stopped recording everything twice. In the meantime, we generated twice the paperwork: once on the old forms and again in the reports printed by the new computerized system. This was part of growing up with new technology and learning to adapt to it.

Beyond getting people to trust the new system and let go of their old ways of working, telecommunications and other ancillary business practices threw a kink in the change management process. At the time, transferring data from the field offices to the Division Offices and the Houston office required mailing copies of the floppy disks to each location, because the technology was not yet ready to transfer data over our private 2,000-mile microwave communication system.

Despite the technological advances, the people and process cogs of the wheel were not ready for the new software, which saved data on floppy disks that could be retrieved as needed. Another major roadblock was that, until the new process was fully adopted across the board, this faster way of working actually meant double work, because printing every report still mimicked the old paper-and-file-cabinet system used in the field and main offices. So we kept killing trees. Entrenched habits are difficult to break, and in the end, we still printed the reports generated by the new system.

The VP of Operations asked us, “When are we going to see the cost of paper start going down in the company?” We truthfully could not answer him.

The speedier pace of innovation

This in-house program remained in service from the mid-1980s to 2002, about 17 years, with only a few enhancements. Today, businesses replace systems every few years, and information technology departments often treat five years as the viable life expectancy of a solution. In 2002, the company moved to a commercial program that linked all the computers together to bring the data into the Houston office. Lotus has since been replaced by Excel, WordStar by Microsoft Word, and dBASE by Access.

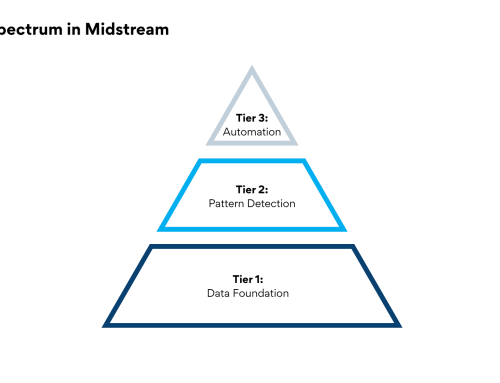

Technical Toolboxes (TT) started in the late 1990s with the original Pipeline Toolbox. Over the last three years, TT has been integrating its modules and applications with Geographic Information Systems (GIS) in its HUBPL integrated data environment, enabling quicker, better, data-driven decisions through workflow automation while reducing the burden of paper and data processing on technical staff.

How does this relate to the paperless dream? By using these same modules and applications in an integrated solution, the HUBPL platform lets users overlay the analysis and data on a digital map instead of printing it. The result is truly live, and users can drill down to any use case they need. Data is analyzed quickly, with results literally one click away. Searches take almost no time, whereas it would take days or weeks to go through those old file cabinets and boxes in the attic.

As with all step changes in technology, there are early adopters, fast followers, and laggards. As the industry faces additional downward pressure from pandemics and climate regulations, we again find ourselves testing how quickly workers can adapt to changes in how people and processes interact with technology. Fortunately, Technical Toolboxes’ new customer success program helps enable measurable, meaningful change in engineering performance.

###

About the Author

Mr. Joe Pikas has 54 years of experience in pipeline construction, operations, corrosion, risk, and integrity in the oil, gas, water, and nuclear industries. He retired from a large gas company in 2002 and continues to work with Technical Toolboxes as an engineering subject matter expert for their technical software applications. He has been involved in the continued development of the Pipeline Toolbox, RSTRENG, HDD, and AC Mitigation software programs. He is a true believer in advancing technologies to move beyond the norm. His consulting company is called Beyond Corrosion Consultants.

About Technical Toolboxes

Technical Toolboxes is a leading provider of integrated desktop and cloud-based pipeline engineering software, online resources, and specialized training for pipeline engineering professionals around the world. Our fit-for-purpose pipeline engineering software platform will help you reduce risk, lower total cost of operations, and accelerate project schedules. Hundreds of companies rely on our certified, industry-standard technology to enhance their pipeline engineering performance. Compare pipeline engineering workflows with and without Technical Toolboxes technology, consulting, and training, and you will see a measurable difference.

As pipeline engineering professionals embrace digital change, Technical Toolboxes’ legacy applications are evolving from manual calculators into sophisticated, integrated, holistic analysis tools whose collaboration features help users make efficient, accurate decisions. The recently released Pipeline HUBPL platform automates integration and analyses to provide insights into infrastructure design and operational fitness. It connects a library of engineering standards and tools to users’ data across the pipeline lifecycle. Integrated maps allow geospatial analysis, visual reconnaissance of existing databases, and use of disparate geographic information system (GIS) data components. In short, with HUBPL automation and integration, users can turn weeks of work into hours. Had today’s innovative technology been available in 1985, it would have provided the truly integrated solution that was sorely needed back then.